Online Games France 2025

Table of Contents

- First-time players: Millionz Casino doubles your first deposit

- Hundreds of games from dozens of renowned developers

- Where can I find the fastest-paying online casino?

- Protecting users' personal information

If you prefer to play from home, you will love Millionz Casino, a brand-new online platform. Millionz Casino is a licensed online gambling site that welcomes players from around the world, and both seasoned players and newcomers to online casinos will find something to enjoy there. Its cutting-edge design, responsive customer service, and secure banking options make it a strong choice for anyone looking for a top-tier online gaming experience.

First-time players: Millionz Casino doubles your first deposit

Millionz Casino looks after new players from the moment they sign up. Its generous welcome bonus https://vegetalid.fr/images/pgs/?millionz-casino-vous-promet-des-gains-importants-et-des-emotions-sans-fin.html matches up to 100% of a player's first deposit. And that's not all!

With 100 free spins on top of the deposit match, players can try a variety of exciting slot machines. Use this offer to grow your bankroll and explore the other great games Millionz Casino has to offer. Don't miss this opportunity: sign up now to secure your place.

Hundreds of games from dozens of renowned developers

Some of the industry's best developers supply the games at Millionz Casino. A wide range of slots is available, whether you prefer traditional mechanical reels or more modern video reels. Beyond slots and video poker, Millionz Casino also offers a variety of table games, such as blackjack, roulette, and baccarat, along with scratch cards and other games of chance.

Millionz Casino has partnered with industry heavyweights such as NetEnt, Betsoft, and Evolution Gaming to offer top-quality games and exciting gameplay. With so much choice, every player is sure to find at least one game they enjoy.

Where can I find the fastest-paying online casino?

Millionz Casino, one of the newest online casinos, takes great pride in its lightning-fast payouts. To get winnings to players sooner, the site has streamlined its withdrawal process: withdrawals can be completed in as little as 24 hours, considerably faster than at most other online casinos.

Millionz Casino accepts all major credit cards, e-wallets, and cryptocurrencies, so players can choose their preferred deposit and withdrawal methods. If you are looking for a hassle-free gaming experience with fast payouts and secure banking, look no further than Millionz Casino.

Protecting users' personal information

Players can be confident that their personal information is kept secure at Millionz Casino. The platform uses state-of-the-art security measures, such as SSL encryption and advanced firewalls, to protect users' private data. Personal information is encrypted to prevent unauthorized access and hacking attempts, and all financial transactions are logged in case a fraud investigation is needed.

Because it operates under a Curaçao gaming license, Millionz Casino is subject to regular audits to ensure fair play, and the information players enter on the site is protected.

NGÓI HERA PRIME TRUNG CẤP

NGÓI HERA PRIME TRUNG CẤP

NGÓI VIGLACERA S

NGÓI VIGLACERA S NGÓI PHẲNG VIGLACERA

NGÓI PHẲNG VIGLACERA

NGÓI NAKAMURA SÓNG NHỎ

NGÓI NAKAMURA SÓNG NHỎ NGÓI NAKAMURA SÓNG LỚN

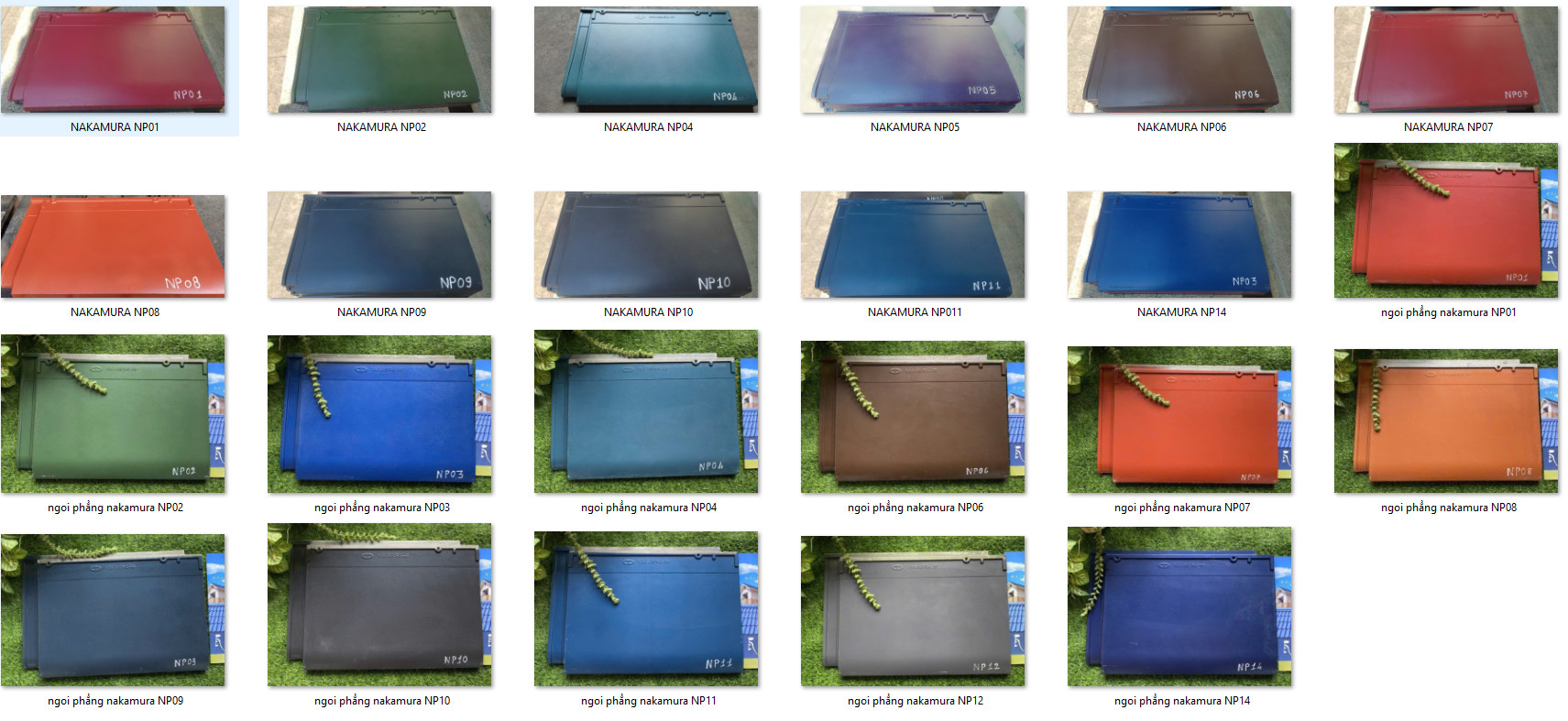

NGÓI NAKAMURA SÓNG LỚN NGÓI PHẲNG NAKAMURA

NGÓI PHẲNG NAKAMURA

GẠCH BÔNG GIÓ19X19

GẠCH BÔNG GIÓ19X19

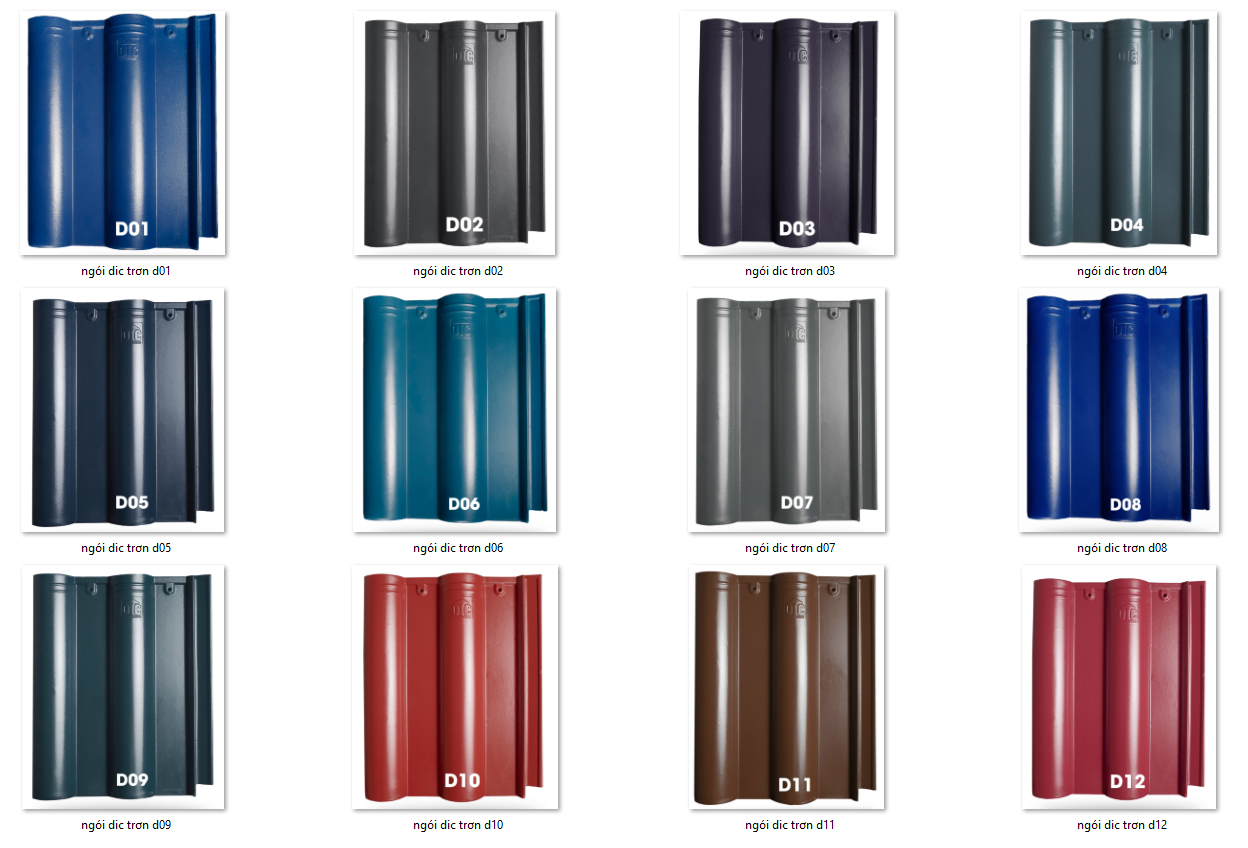

NGÓI RUBY SÓNG LỚN

NGÓI RUBY SÓNG LỚN