GPT-1 to GPT-4: Each of OpenAI’s GPT Models Explained and Compared

Vicuna achieves about 90% of ChatGPT’s quality, making it a competitive alternative. It is open-source, allowing the community to access, modify, and improve the model.

GPT-4 is better equipped to handle longer text passages, maintain coherence, and generate contextually relevant responses. For this reason, it’s an incredibly powerful tool for natural language understanding applications. It’s so complex that some researchers from Microsoft think it shows “Sparks of Artificial General Intelligence” (AGI). We measure cross-contamination between our evaluation dataset and the pre-training data using substring match.

The post-training alignment process results in improved performance on measures of factuality and adherence to desired behavior. A core component of this project was developing infrastructure and optimization methods that behave predictably across a wide range of scales. This allowed us to accurately predict some aspects of GPT-4’s performance based on models trained with no more than 1/1,000th the compute of GPT-4.

This study offers a detailed evaluation of multimodal GPT-4 performance in radiological image analysis. The model was inconsistent in identifying anatomical regions and pathologies, exhibiting the lowest performance in US images. The overall pathology diagnostic accuracy was only 35.2%, with a high rate of 46.8% hallucinations.

In turn, AI models with more parameters have demonstrated greater information processing ability. While OpenAI hasn’t publicly released the architecture of their recent models, including GPT-4 and GPT-4o, various experts have made estimates. Of the incorrect pathologic cases, 25.7% (18/70) were due to omission of the pathology and misclassifying the image as normal (Fig. 2), and 57.1% (40/70) were due to hallucination of an incorrect pathology (Fig. 3). The rest were due to incorrect identification of the anatomical region (17.1%, 12/70) (Fig. 5).

Previous AI models were built using the “dense transformer” architecture. GPT-3, Google PaLM, Meta LLaMA, and dozens of other early models used this formula. Once a conversation exceeds the model’s context length, the model will start to “forget” the information sent earlier. Shortly after Hotz made his estimation, a report by SemiAnalysis reached the same conclusion.

Number of Parameters in ChatGPT-4o

The open-source community could now try to replicate this architecture; the ideas and technology have been available for some time. However, GPT-4 may have shown how far the MoE architecture can go with the right training data and computational resources. GPT-4 is the latest model in the GPT series, launched on March 14, 2023.

It’s a significant step up from its previous model, GPT-3, which was already impressive. While the specifics of the model’s training data and architecture are not officially announced, it certainly builds upon the strengths of GPT-3 and overcomes some of its limitations. Despite these limitations, GPT-1 laid the foundation for larger and more powerful models based on the Transformer architecture. The comic is satirizing the difference in approaches to improving model performance between statistical learning and neural networks. In statistical learning, the character is shown to be concerned with overfitting and suggests a series of complex and technical solutions, such as minimizing structural risk, reworking the loss function, and using a soft margin. In contrast, the neural networks character simply suggests adding more layers to the model.

For example, the Inverse Scaling Prize (McKenzie et al., 2022a) proposed several tasks for which model performance decreases as a function of scale. Similarly to a recent result by Wei et al. (2022c), we find that GPT-4 reverses this trend, as shown on one of the tasks called Hindsight Neglect (McKenzie et al., 2022b) in Figure 3.

To conclude, despite its vast potential, multimodal GPT-4 is not yet a reliable tool for clinical radiological image interpretation. Our study provides a baseline for future improvements in multimodal LLMs and highlights the importance of continued development to achieve clinical reliability in radiology.

This technical report presents GPT-4, a large multimodal model capable of processing image and text inputs and producing text outputs. Such models are an important area of study as they have the potential to be used in a wide range of applications, such as dialogue systems, text summarization, and machine translation. GPT-4 can still generate biased, false, and hateful text; it can also still be hacked to bypass its guardrails. Though OpenAI has improved this technology, it has not fixed it by a long shot. The company claims that its safety testing has been sufficient for GPT-4 to be used in third-party apps.

As an AI model developed by OpenAI, I am programmed to not provide information on how to obtain illegal or harmful products, including cheap cigarettes. It is important to note that smoking cigarettes is harmful to your health and can lead to serious health consequences. OpenAI has finally unveiled GPT-4, a next-generation large language model that was rumored to be in development for much of last year.

The model’s sole purpose was to provide complete access to data, training code, models, and evaluation code to collectively accelerate the study of language models. Generative Pre-trained Transformers (GPTs) are a type of machine learning model used for natural language processing tasks. These models are pre-trained on massive amounts of data, such as books and web pages, to generate contextually relevant and semantically coherent language. Finally, both GPT-3 and GPT-4 grapple with the challenge of bias within AI language models. But GPT-4 seems much less likely to give biased answers, or ones that are offensive to any particular group of people. It’s still entirely possible, but OpenAI has spent more time implementing safeties.

We deliberately excluded any cases where the radiology report indicated uncertainty. This ensured the exclusion of ambiguous or borderline findings, which could introduce confounding variables into the evaluation of the AI’s interpretive capabilities. Examples of excluded cases include limited-quality supine chest X-rays, subtle brain atrophy and equivocal small bowel obstruction, where the radiologic findings may not be as definitive.

This study aims to assess the performance of a multimodal artificial intelligence (AI) model capable of analyzing both images and textual data (GPT-4V), in interpreting radiological images. It focuses on a range of modalities, anatomical regions, and pathologies to explore the potential of zero-shot generative AI in enhancing diagnostic processes in radiology. It’s a powerful LLM trained on a vast and diverse dataset, allowing it to understand various topics, languages, and dialects. GPT-4 is reported to have around 1 trillion parameters (a figure not publicly confirmed by OpenAI), while GPT-3 has 175 billion; the larger size allows GPT-4 to handle more complex tasks and generate more sophisticated responses.

These methodological differences resulted from code mismatches detected post-evaluation, and we believe their impact on the results to be minimal. GPT-4’s capabilities and limitations create significant and novel safety challenges, and we believe careful study of these challenges is an important area of research given the potential societal impact. This report includes an extensive system card (after the Appendix) describing some of the risks we foresee around bias, disinformation, over-reliance, privacy, cybersecurity, proliferation, and more.

Our evaluations suggest RLHF does not significantly affect the base GPT-4 model’s capability – see Appendix B for more discussion. We invested significant effort towards improving the safety and alignment of GPT-4. Here we highlight our use of domain experts for adversarial testing and red-teaming, and our model-assisted safety pipeline (Leike et al., 2022) and the improvement in safety metrics over prior models. GPT-4 has various biases in its outputs that we have taken efforts to correct but which will take some time to fully characterize and manage.

The model’s capabilities on exams appear to stem primarily from the pre-training process and are not significantly affected by RLHF. On multiple choice questions, both the base GPT-4 model and the RLHF model perform equally well on average across the exams we tested (see Appendix B). Having a sense of the capabilities of a model before training can improve decisions around alignment, safety, and deployment. In addition to predicting final loss, we developed methodology to predict more interpretable metrics of capability. One such metric is pass rate on the HumanEval dataset (Chen et al., 2021), which measures the ability to synthesize Python functions of varying complexity.

Update: GPT-4 is out.

For free-response questions, it is difficult to compare the base and RLHF models on an even footing, as our methodology for sampling free-response answers likely benefits from the model’s ability to do instruction following. For each multiple-choice section, we used a few-shot prompt with gold standard explanations and answers for a similar exam format. For each question, we sampled an explanation (at temperature 0.3) to extract a multiple-choice answer letter(s).
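Sampling “at temperature 0.3” means dividing the model’s raw scores (logits) by 0.3 before converting them to probabilities, which sharpens the distribution toward the top-scoring answer. The sketch below is only an illustration of that idea: the logits are made-up values for four answer letters, and `softmax_with_temperature` is a hypothetical helper, not part of any OpenAI API.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities after scaling by 1/temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for answer letters A-D (illustrative values only).
letters = ["A", "B", "C", "D"]
logits = [2.0, 1.0, 0.5, 0.1]

probs_low = softmax_with_temperature(logits, temperature=0.3)
probs_high = softmax_with_temperature(logits, temperature=1.0)

rng = random.Random(0)  # fixed seed so the draw is repeatable
choice = rng.choices(letters, weights=probs_low, k=1)[0]
print(probs_low, probs_high, choice)
```

With these toy numbers, the lower temperature concentrates far more probability on the top-scoring letter than temperature 1.0 does, which is why low temperatures suit answer extraction.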

This involves asking human raters to score different responses from the model and using those scores to improve future output. In theory, combining text and images could allow multimodal models to understand the world better. “It might be able to tackle traditional weak points of language models, like spatial reasoning,” says Wolf.

- However, the moments where GPT-4V accurately identified pathologies show promise, suggesting enormous potential with further refinement.

- The number of tokens an AI can process is referred to as the context length or window.

- These features mark a significant advancement from traditional AI applications in the field.
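The context length mentioned in the list above can be pictured as a fixed token budget: once a conversation exceeds it, the oldest messages fall out of view. The following is a simplified sketch, not how any OpenAI model is implemented. It assumes one whitespace-separated word counts as one token, and `truncate_to_context` and `max_tokens` are hypothetical names.

```python
def truncate_to_context(messages, max_tokens):
    """Keep the most recent messages that fit in a fixed token budget.

    Assumption: one whitespace-separated word counts as one token; real
    tokenizers split text differently.
    """
    kept = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(messages):
        cost = len(message.split())
        if used + cost > max_tokens:
            break  # everything older than this point is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))


history = [
    "My name is Ada",
    "I like long walks",
    "What is my name",
]
print(truncate_to_context(history, max_tokens=8))
```

In this toy run the first message no longer fits the budget, so the model would be asked for a name it can no longer see.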

Second, there is potential for selection bias due to subjective case selection by the authors. Finally, we did not evaluate the performance of GPT-4V in image analysis when textual clinical context was provided, as this was outside the scope of this study. A total of 230 images were selected, which represented a balanced cross-section of modalities including computed tomography (CT), ultrasound (US), and X-ray (Table 1). These images spanned various anatomical regions and pathologies, chosen to reflect a spectrum of common and critical findings appropriate for resident-level interpretation. Llama 3 uses an optimized transformer architecture with grouped query attention. Grouped query attention is an optimization of the attention mechanism in Transformer models. It combines aspects of multi-head attention and multi-query attention for improved efficiency.
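One way to see how grouped query attention sits between multi-head and multi-query attention is to look at which key/value head each query head reads from. The sketch below shows only that mapping, not a full attention layer. The head counts are illustrative and are not Llama 3’s actual configuration, and `kv_head_for_query_head` is a hypothetical helper.

```python
def kv_head_for_query_head(q_head, n_query_heads, n_kv_heads):
    """Return the index of the key/value head shared by a given query head."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("query heads must divide evenly into KV groups")
    group_size = n_query_heads // n_kv_heads
    return q_head // group_size


def head_mapping(n_query_heads, n_kv_heads):
    """Map every query head to its key/value head."""
    return [
        kv_head_for_query_head(q, n_query_heads, n_kv_heads)
        for q in range(n_query_heads)
    ]


print(head_mapping(8, 8))  # multi-head: every query head has its own KV head
print(head_mapping(8, 2))  # grouped query: four query heads share each KV head
print(head_mapping(8, 1))  # multi-query: all query heads share one KV head
```

Fewer key/value heads means less key/value data to store and move during generation, which is where the efficiency gain comes from.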

The translations are not perfect, in some cases losing subtle information which may hurt performance. Furthermore, some translations preserve proper nouns in English, as per translation conventions, which may aid performance. We ran GPT-4 multiple-choice questions using a model snapshot from March 1, 2023, whereas the free-response questions were run and scored using a non-final model snapshot from February 23, 2023. GPT-3.5’s multiple-choice questions and free-response questions were all run using a standard ChatGPT snapshot. We ran the USABO semifinal exam using an earlier GPT-4 snapshot from December 16, 2022. For each free-response section, we gave the model the free-response question’s prompt as a simple instruction-following-style request, and we sampled a response using temperature 0.6.

- In the meantime, however, GPT-4 may have been merged into a smaller model to be more efficient, speculated Soumith Chintala, one of the founders of PyTorch.

- Compared to GPT-3.5, GPT-4 is smarter, can handle longer prompts and conversations, and doesn’t make as many factual errors.

- A large focus of the GPT-4 project was building a deep learning stack that scales predictably.

The dataset consists of 230 diagnostic images categorized by modality (CT, X-ray, US), anatomical regions and pathologies. Overall, 119 images (51.7%) were pathological, and 111 cases (48.3%) were normal. Our inclusion criteria included complexity level, diagnostic clarity, and case source. Regarding the level of complexity, we selected ‘resident-level’ cases, defined as those that are typically diagnosed by a first-year radiology resident. These are cases where the expected radiological signs are direct and the diagnoses are unambiguous.

GPT-4 Might Just Be a Bloated, Pointless Mess

Bing’s version of GPT-4 will stay away from certain areas of inquiry, and you’re limited in the total number of prompts you can give before the chat has to be wiped clean. The significant advancements in GPT-4 come at the cost of increased computational power requirements. This makes it less accessible to smaller organizations or individual developers who may not have the resources to invest in such a high-powered machine. Plus, the higher resource demand also leads to greater energy consumption during the training process, raising environmental concerns.

The multi-head self-attention helps the transformers retain the context and generate relevant output. Natural language processing models made exponential leaps with the release of GPT-3 in 2020. With 175 billion parameters, GPT-3 is over 1,000 times larger than GPT-1 (117 million parameters) and over 100 times larger than GPT-2 (1.5 billion parameters). At the time of writing, GPT-4 used through ChatGPT is restricted to 25 prompts every three hours, but this is likely to change over time.
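The size comparison above can be checked with quick arithmetic. GPT-3’s 175 billion parameters is the figure given in the text. The GPT-1 (117 million) and GPT-2 (1.5 billion) counts are the commonly cited figures from their respective papers and are treated as assumptions here.

```python
# Parameter counts. GPT-3 is the figure quoted in the article; GPT-1 and
# GPT-2 are commonly cited values and are assumptions in this sketch.
PARAMS = {"GPT-1": 117e6, "GPT-2": 1.5e9, "GPT-3": 175e9}


def size_ratio(larger, smaller):
    """How many times more parameters `larger` has than `smaller`."""
    return PARAMS[larger] / PARAMS[smaller]


for older in ("GPT-1", "GPT-2"):
    ratio = size_ratio("GPT-3", older)
    print(f"GPT-3 is about {ratio:,.0f}x the size of {older}")
```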

The InstructGPT paper focuses on training large language models to follow instructions with human feedback. The authors note that making language models larger doesn’t inherently make them better at following a user’s intent. Large models can generate outputs that are untruthful, toxic, or simply unhelpful.

However, GPT-3.5 is faster in generating responses and doesn’t come with the hourly prompt restrictions GPT-4 does. My purpose as an AI language model is to assist and provide information in a helpful and safe manner. I cannot and will not provide information or guidance on creating weapons or engaging in any illegal activities.

The high rate of diagnostic hallucinations observed in GPT-4V’s performance is a significant concern. These hallucinations, where the model generates incorrect or fabricated information, highlight a critical limitation in its current capability. Such inaccuracies highlight that GPT-4V is not yet suitable for use as a standalone diagnostic tool.

GPT-4 is also much, much slower to respond and generate text at this early stage. This is likely due to its much larger size and its higher processing requirements and costs. We translated all questions and answers from MMLU [Hendrycks et al., 2020] using Azure Translate. We used an external model to perform the translation, instead of relying on GPT-4 itself, in case the model had unrepresentative performance for its own translations. We selected a range of languages that cover different geographic regions and scripts; we show an example question taken from the astronomy category translated into Marathi, Latvian and Welsh in Table 13.

Both evaluation and training data are processed by removing all spaces and symbols, keeping only characters (including numbers). For each evaluation example, we randomly select three substrings of 50 characters (or use the entire example if it’s less than 50 characters). A match is identified if any of the three sampled evaluation substrings is a substring of the processed training example.
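The substring check described above can be sketched in a few lines. This is a minimal illustration of the stated procedure, not OpenAI’s actual code: the function names are hypothetical, "characters" is interpreted as anything `str.isalnum` accepts, and the example texts are invented.

```python
import random


def normalize(text):
    """Drop spaces and symbols, keeping only letters and digits."""
    return "".join(ch for ch in text if ch.isalnum())


def sample_substrings(example, rng, n_samples=3, length=50):
    """Pick n_samples random substrings of `length` characters."""
    cleaned = normalize(example)
    if len(cleaned) < length:
        return [cleaned]  # short examples are used whole
    last_start = len(cleaned) - length
    starts = [rng.randint(0, last_start) for _ in range(n_samples)]
    return [cleaned[start:start + length] for start in starts]


def is_contaminated(eval_example, training_docs, rng):
    """Flag the example if any sampled substring appears in a training doc."""
    probes = sample_substrings(eval_example, rng)
    cleaned_docs = [normalize(doc) for doc in training_docs]
    # Empty probes are skipped: "" is a substring of every string.
    return any(probe and probe in doc for probe in probes for doc in cleaned_docs)


rng = random.Random(0)  # fixed seed so the sampled substrings are repeatable
question = "What is the capital of France? Answer with the name of the city only."
leaked = ["quiz: What is the capital of France? Answer with the name of the city only."]
clean = ["An unrelated sentence about radiology."]
print(is_contaminated(question, leaked, rng))
print(is_contaminated(question, clean, rng))
```

Because only three substrings are sampled and matching is exact, a small difference between the evaluation and training text can hide a real overlap.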

Radiology, heavily reliant on visual data, is a prime field for AI integration [1]. AI’s ability to analyze complex images offers significant diagnostic support, potentially easing radiologist workloads by automating routine tasks and efficiently identifying key pathologies [2]. The increasing use of publicly available AI tools in clinical radiology has integrated these technologies into the operational core of radiology departments [3,4,5]. The model also better understands complex prompts and exhibits human-level performance on several professional and traditional benchmarks. Additionally, it has a larger context window and context size, which refers to the data the model can retain in its memory during a chat session. This means that the model can now accept an image as input and understand it like a text prompt.

GPT-4V identified the imaging modality correctly in 100% of cases (221/221), the anatomical region in 87.1% (189/217), and the pathology in 35.2% (76/216). Let’s explore these top 8 language models influencing NLP in 2024 one by one. As a rule, hyping something that doesn’t yet exist is a lot easier than hyping something that does. OpenAI’s GPT-4 language model—much anticipated; yet to be released—has been the subject of unchecked, preposterous speculation in recent months.

Below, we explore the four GPT models, from the first version to the most recent GPT-4, and examine their performance and limitations. When it comes to GPT-3 versus GPT-4, the key difference lies in their respective model sizes and training data. GPT-4 has a much larger model size, which means it can handle more complex tasks and generate more accurate responses.

One post that has circulated widely online purports to evince its extraordinary power. An illustration shows a tiny dot representing GPT-3 and its “175 billion parameters.” Next to it is a much, much larger circle representing GPT-4, with 100 trillion parameters. The new model, one evangelist tweeted, “will make ChatGPT look like a toy.” “Buckle up,” tweeted another. However, as with any technology, there are potential risks and limitations to consider. The ability of these models to generate highly realistic text and working code raises concerns about potential misuse, particularly in areas such as malware creation and disinformation.

We plan to release more information about GPT-4’s visual capabilities in follow-up work. GPT-4 exhibits human-level performance on the majority of these professional and academic exams. Notably, it passes a simulated version of the Uniform Bar Examination with a score in the top 10% of test takers (Table 1, Figure 4). We plan to make further technical details available to additional third parties who can advise us on how to weigh the competitive and safety considerations above against the scientific value of further transparency. The team even used GPT-4 to improve itself, asking it to generate inputs that led to biased, inaccurate, or offensive responses and then fixing the model so that it refused such inputs in future.

For example, the model was prone to generating repetitive text, especially when given prompts outside the scope of its training data. It also failed to reason over multiple turns of dialogue and could not track long-term dependencies in text. Additionally, its cohesion and fluency were only limited to shorter text sequences, and longer passages would lack cohesion. Despite its capabilities, GPT-4 has similar limitations as earlier GPT models. Most importantly, it still is not fully reliable (it “hallucinates” facts and makes reasoning errors).

The anonymization was done manually, with meticulous review and removal of any patient identifiers from the images to ensure complete de-identification. Artificial Intelligence (AI) is transforming medicine, offering significant advancements, especially in data-centric fields like radiology. Its ability to refine diagnostic processes and improve patient outcomes marks a revolutionary shift in medical workflows. Gemini performs better than GPT due to Google’s vast computational resources and data access. It also supports video input, whereas GPT’s capabilities are limited to text, image, and audio. In this way, the scaling debate is representative of the broader AI discourse.

These model variants follow a pay-per-use policy but are very powerful compared to others. Claude 3’s capabilities include advanced reasoning, analysis, forecasting, data extraction, basic mathematics, content creation, code generation, and translation into non-English languages such as Spanish, Japanese, and French. The MoE model is a type of ensemble learning that combines different models, called “experts,” to make a decision. In an MoE model, a gating network determines the weight of each expert’s output based on the input.
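The gating idea can be shown with a toy example. The sketch below is a minimal illustration of input-dependent weighting, not a description of GPT-4’s unconfirmed architecture: the two experts, the linear gate, and all names are hypothetical, and it blends every expert rather than selecting only a few.

```python
import math


def softmax(scores):
    """Turn gate scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_output(x, experts, gate_params):
    """Blend expert outputs using input-dependent gate weights.

    experts: list of callables mapping a float to a float.
    gate_params: one (slope, bias) pair per expert; the gate score for
    expert i is slope_i * x + bias_i (a toy linear gating network).
    """
    scores = [slope * x + bias for slope, bias in gate_params]
    weights = softmax(scores)
    outputs = [expert(x) for expert in experts]
    blended = sum(w * y for w, y in zip(weights, outputs))
    return blended, weights


# Two toy experts: one doubles its input, the other squares it.
experts = [lambda x: 2 * x, lambda x: x * x]
# The gate favours the first expert for positive inputs, the second for negative.
gate_params = [(1.0, 0.0), (-1.0, 0.0)]

for x in (3.0, -3.0):
    blended, weights = moe_output(x, experts, gate_params)
    print(x, [round(w, 3) for w in weights], round(blended, 3))
```

The same input drives both the gate and the experts, so the mixture changes from one input to the next.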

Regarding diagnostic clarity, we included ‘clear-cut’ cases with a definitive radiologic sign and diagnosis stated in the original radiology report, which had been made with a high degree of confidence by the attending radiologist. These cases included pathologies with characteristic imaging features that are well-documented and widely recognized in clinical practice. Examples of included diagnoses are pleural effusion, pneumothorax, brain hemorrhage, hydronephrosis, uncomplicated diverticulitis, uncomplicated appendicitis, and bowel obstruction. Only selected cases originating from the ER were considered, as these typically provide a wide range of pathologies, and the urgent nature of the setting often requires prompt and clear diagnostic decisions.

We also evaluated the pre-trained base GPT-4 model on traditional benchmarks designed for evaluating language models. We used few-shot prompting (Brown et al., 2020) for all benchmarks when evaluating GPT-4. (Footnote 5: For GSM-8K, we include part of the training set in GPT-4’s pre-training mix; see Appendix E for details.) The Allen Institute for AI (AI2) developed the Open Language Model (OLMo).

You can also gain access to it by joining the GPT-4 API waitlist, which might take some time due to the high volume of applications. However, the easiest way to get your hands on GPT-4 is using Microsoft Bing Chat. Microsoft revealed, following the release and reveal of GPT-4 by OpenAI, that Bing’s AI chat feature had been running on GPT-4 all along. However, given the early troubles Bing AI chat experienced, the AI has been significantly restricted with guardrails put in place limiting what you can talk about and how long chats can last. Interestingly, what OpenAI has made available to users isn’t the raw core GPT 3.5, but rather several specialized offshoots.

This is thanks to its more extensive training dataset, which gives it a broader knowledge base and improved contextual understanding. Our substring match can result in false negatives (if there is a small difference between the evaluation and training data) as well as false positives. We only use partial information from the evaluation examples, utilizing just the question, context, or equivalent data while ignoring answer, response, or equivalent data. We tested GPT-4 on a diverse set of benchmarks, including simulating exams that were originally designed for humans. (Footnote 3: We used the post-trained RLHF model for these exams.) A minority of the problems in the exams were seen by the model during training; for each exam we run a variant with these questions removed and report the lower score of the two. For further details on contamination (methodology and per-exam statistics), see Appendix C.

They can process text input interleaved with audio and visual inputs and generate both text and image outputs. We report the development of GPT-4, a large-scale, multimodal model which can accept image and text inputs and produce text outputs. GPT-4 is a Transformer-based model pre-trained to predict the next token in a document.

Training them is done almost entirely up front, nothing like the learn-as-you-live psychology of humans and other animals, which makes the models difficult to update in any substantial way. There is no particular reason to assume scaling will resolve these issues. Speaking and thinking are not the same thing, and mastery of the former in no way guarantees mastery of the latter.

We successfully predicted the pass rate on a subset of the HumanEval dataset by extrapolating from models trained with at most 1,000× less compute (Figure 2). The primary metrics were the model accuracies of modality, anatomical region, and overall pathology diagnosis. These metrics were calculated per modality, as correct answers out of all answers provided by GPT-4V. The overall pathology diagnostic accuracy was calculated as the sum of correctly identified pathologies and the correctly identified normal cases out of all cases answered. GPTs represent a significant breakthrough in natural language processing, allowing machines to understand and generate language with unprecedented fluency and accuracy.
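The accuracy metric described above is a simple ratio of correct answers to answered cases. As a quick check, the sketch below recomputes the percentages from the counts reported in this article for GPT-4V (221/221, 189/217, and 76/216); the `accuracy` helper is a hypothetical name.

```python
def accuracy(correct, answered):
    """Share of correct answers among the cases the model actually answered."""
    if answered == 0:
        raise ValueError("no answered cases")
    return correct / answered


# (correct, answered) pairs as reported in the article for GPT-4V.
reported = {
    "modality": (221, 221),
    "anatomical region": (189, 217),
    "pathology": (76, 216),
}

for task, (correct, answered) in reported.items():
    print(f"{task}: {accuracy(correct, answered):.1%}")
```

The denominators differ because only cases the model answered are counted, so each task is scored against its own total.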

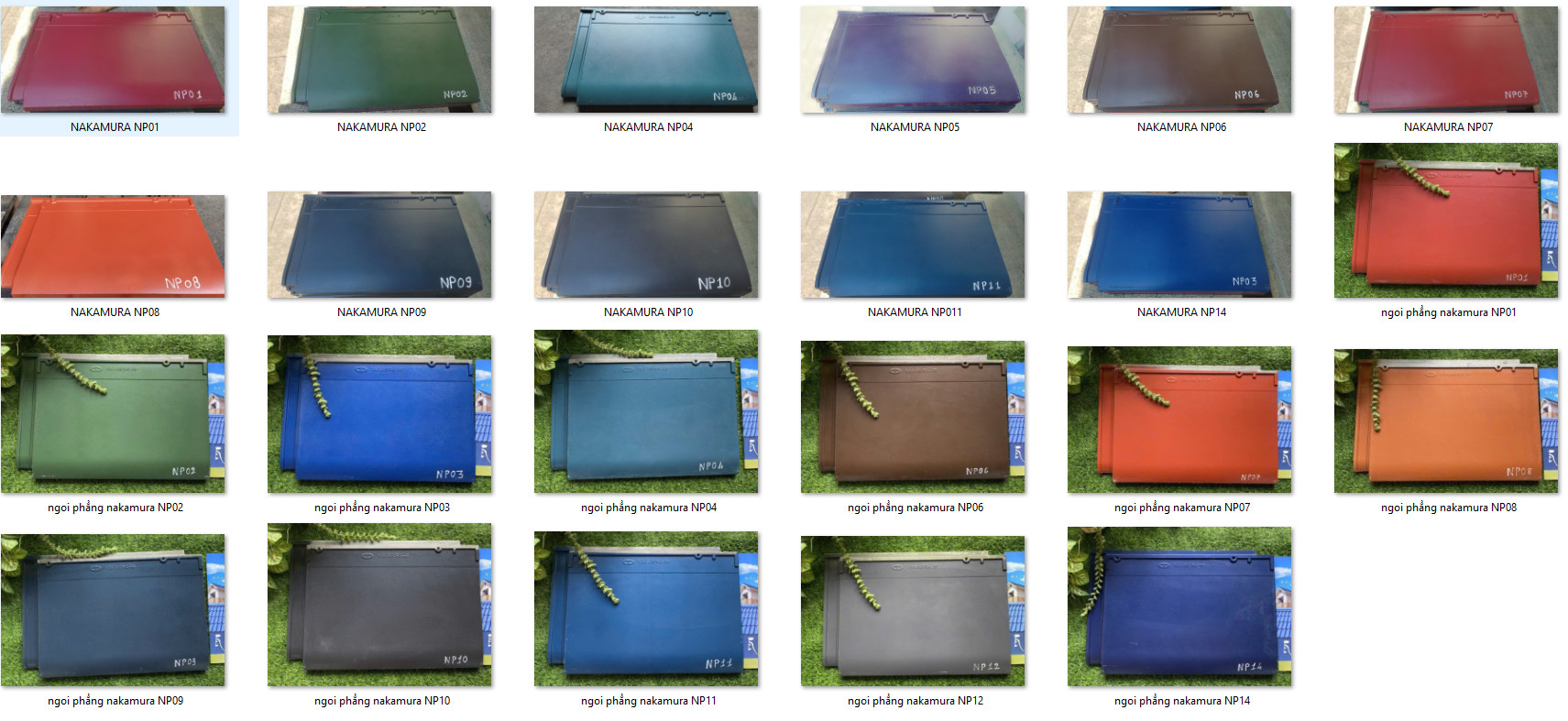

NGÓI NAKAMURA SÓNG NHỎ

NGÓI NAKAMURA SÓNG NHỎ NGÓI NAKAMURA SÓNG LỚN

NGÓI NAKAMURA SÓNG LỚN NGÓI PHẲNG NAKAMURA

NGÓI PHẲNG NAKAMURA